How Can You Use Data In The Classroom To Meaningfully Affect Learning?

by Terry Heick

What are some simple challenges of data-based teaching? Two easy ones: teachers lack the planning time to create assessments and to use the data those assessments yield, and many assessments are not designed for teachers or students. That is, they don’t produce anything of real value to the teaching and learning process.

Note: If you don’t already have a plan for the data before giving the assessment, you’re already behind. Among the challenges of assessment, this concept–as it applies to formal academic classrooms designed to promote mastery of academic standards–is near the top.

Unless its data feeds directly into your instructional design, itself embedded within a dynamic curriculum map, an assessment is just a hurdle for the student (one they might clear, or one that might trip them up) and more work for you.

And let’s talk about how much we (as teachers) like to jump hurdles for others.

This is the third time in as many weeks that I’ve written about assessment, which usually means there’s something that’s bothering me and I can’t figure out what. In Evolving How We Plan, I pointed contentiously at the ‘unit’ and ‘lesson’ as impediments to personalized learning.

Simply put, most planning templates in most schools used by most teachers on most days don’t allow for data to be easily absorbed. They’re not designed for students; they’re designed for curriculum. Their audience isn’t students or communities, but rather administrators and colleagues.

These are industrial documents.

Depending on what grade level and content area you teach, and how your curriculum is packaged, what you should do with data, and what you are reasonably able to do with it, will vary. But put roughly, teachers administer quizzes and exams and do their best to ‘re-teach.’ Even in high-functioning professional learning communities, teachers are behind before they give their first test.

Their teaching just isn’t ready for the data.

What Should Assessments ‘Do’?

In The Most Important Question Every Assessment Should Answer, I outlined one of the biggest of the many big ideas that revolve around tests, quizzes, and other snapshots of understanding–information. In short (depending on the assessment form, purpose, context, type, etc.), the primary function of assessment in a dynamic learning environment is to provide data to revise planned instruction. It tells you where to go next.

Unfortunately, assessments aren’t always used this way, even when they appear to be. Instead, they’re high drama that students ‘pass’ or ‘fail.’ They’re matters of professional learning communities and artifacts for ‘data teams.’ They’re designed to function but instead just parade about and make a spectacle of themselves.

Within PLCs and data teams, the goal is to establish a standardized process to incrementally improve teaching and learning, but the minutiae and processes within these teaching improvement tools can center themselves over the job they’re supposed to be doing. We learn to ‘become proficient’ at PLCs and data teams the same way students ‘become proficient’ at taking tests. Which is crazy and backward, and it’s no wonder education hates innovation.

To teach a student, you have to know what they do and don’t know. What they can or can’t do. ‘They’ doesn’t refer to the class, either, but to the student. That student: what do they seem to know? How did you measure, and how much do you trust that measurement? This is fundamental, and in an academic institution, more or less ‘true.’

Yet the constructivist model is not compatible with many existing educational forms and structures. Constructivism, depending as it does on the learner’s own knowledge creation over time through reflection and iteration, seems to resist modern assessment forms that seek to pop in, take a snapshot, and pop back out.

These snapshots are taken with no ‘frames’ waiting for them within the lesson or unit; they’re just grades and measurements, with little hope of substantively changing how and when students learn what.

Teachers As Learning Designers

There is also the matter of teaching practice working behind the scenes here: what teachers believe, and how those beliefs inform their practice, including assessment design and data management.

In Classroom Assessment Practices and Teachers’ Self-Perceived Assessment Skills, Zhicheng Zhang and Judith A. Burry-Stock separate “assessment practices and assessment skills,” explaining that they “are related but have different constructs. Whereas the former pertains to assessment activities, the latter reflects an individual’s perception of his or her skill level in conducting those activities. This may explain why teachers rated their assessment skills as good even though they were found inadequately prepared to conduct classroom assessment in several areas.”

Assessment design can’t exist independently from instructional design or curriculum design.

In The Inconvenient Truths of Assessment, I said that “It’s an extraordinary amount of work to design precise and personalized assessments that illuminate pathways forward for individual students–likely too much for one teacher to do so consistently for every student.” This is such a challenge not because personalizing learning is inherently hard, but because it is hard when you use traditional units (e.g., genre-based units in English-Language Arts) and basic learning models (e.g., direct instruction, basic grouping, maybe some tiering, etc.).

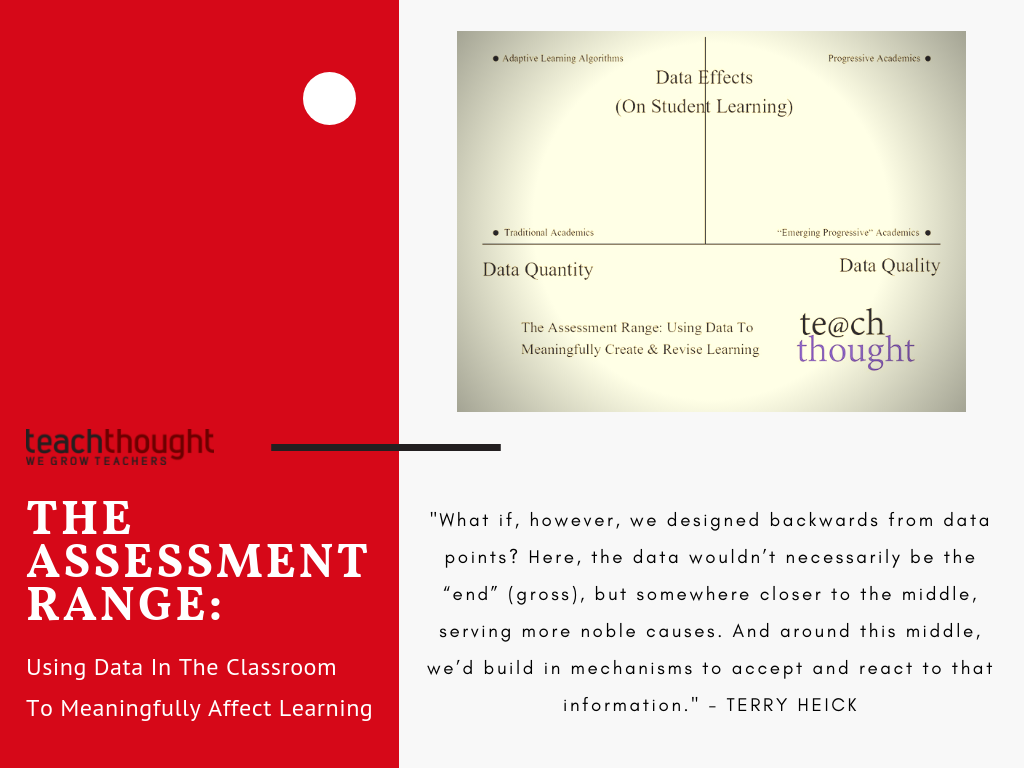

Change the tools, and you can change the machine; change the machine, and you can change the tools. The question, then: How can we design learning along chronological (time) and conceptual (content) boundaries so that the learning itself creates the data it requires? Adaptive learning algorithms within certain #edtech products are coded along these lines. So how do we do this face-to-face, nose-to-book, pen-to-hand?

If we insist on using a data-based and research-grounded ed reform model, this is crucial, no?

Backward Planning Of A Different Kind

Assessments are data creation tools. Why collect the data if it’s not going to be used? This is all very simple: Don’t give an assessment unless the data is actually going to change future learning for *that* student.

Think about what an assessment can do. Give the student a chance to show what they know. Act as a microscope for you to examine what they seem to understand. Make the student feel good or bad. Motivate or demotivate the student. De-authenticate an otherwise authentic learning experience.

Think about what you can do with assessment data as a teacher. Report it to others. Assign an arbitrary alphanumeric symbol in hopes that it symbolizes-student-achievement-but-can-we-really-agree-what-that-means-anyway? Spin it to colleagues or parents or students. Overreact to it. Misunderstand it. Ignore it. Use it to make you feel good or bad about your own teaching–like you’re ‘holding students accountable’ with the ‘bar high,’ or like no matter what you do, it’s still not enough.

Grant Wiggins (whose work I often gush over) and colleague Jay McTighe are known for their Understanding by Design template, a model that depends on the idea of backward design. That is, when we design learning, we begin with the end in mind. These ‘ends’ are usually matters of understanding: I want students to know this, be able to write or solve this, etc.

What if, however, we designed backward from data points? Here, the data wouldn’t necessarily be the ‘end’ but somewhere closer to the middle, serving more noble causes. And around this middle, we’d build in mechanisms to accept and react to that information.

We’d have a system that expected a certain amount of ‘proficiency’ and ‘non-proficiency.’ Two weeks into the ‘unit’ (if we insist on using units), we’re waiting on the very crucial data from a small series of diverse assessments (maybe non-threatening assessments?) so that we know what to do and where to go next. We already have a plan for it before we even start. We’re ready to use data to substantively, elegantly, and humanly revise what we had planned. We can’t move on without this data, or else we’re just being ridiculous.

As it is, we keep the conveyors running while the bottles crash off the belts all around us.

Adapted image attribution: Flickr user vancouverfilmschool