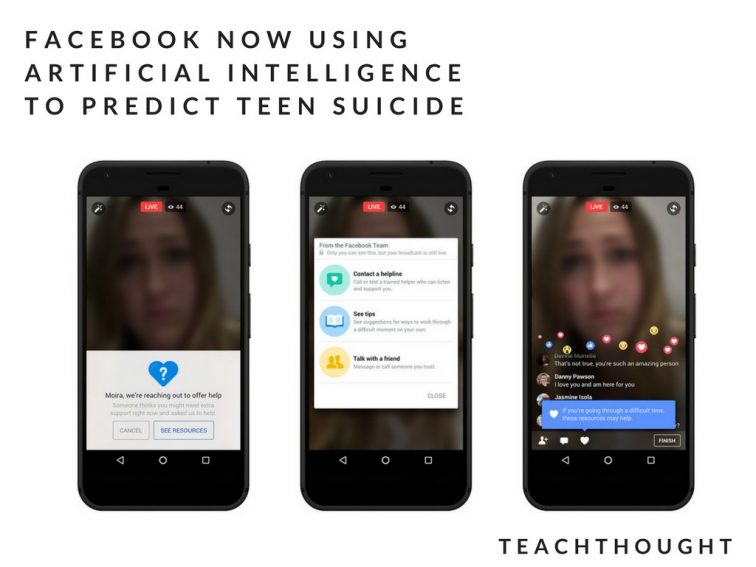

Facebook Now Using AI To Predict Teen Suicide

by TeachThought Staff

On November 27, 2017, Mark Zuckerberg announced plans—through a Facebook post—to use an emerging technology (Artificial Intelligence) to help predict and prevent teen suicide.

Social media, bullying, identity, and mental health are increasingly tied together. The suicide rate for white females aged 10-14 has tripled since 1990, underscoring a clear need for tools, strategies, and support to address this growing problem.

(No word on whether the technology will eventually be extended to adults; according to the CDC, in 2011 middle-aged adults accounted for the largest demographic of suicides at 56%, an increase of 30% between 1990 and 2010.)

Zuckerberg began his post by explaining the benefits that have already been seen while clarifying that the use of artificial intelligence for emotional and mental health signaling is in its infancy. (He may also have alluded to very public concerns expressed by tech expert Elon Musk about the safety of artificial intelligence.)

“Here’s a good use of AI: helping prevent suicide.

Starting today we’re upgrading our AI tools to identify when someone is expressing thoughts about suicide on Facebook so we can help get them the support they need quickly. In the last month alone, these AI tools have helped us connect with first responders quickly more than 100 times.

With all the fear about how AI may be harmful in the future, it’s good to remind ourselves how AI is actually helping save people’s lives today.

There’s a lot more we can do to improve this further. Today, these AI tools mostly use pattern recognition to identify signals — like comments asking if someone is okay — and then quickly report them to our teams working 24/7 around the world to get people help within minutes. In the future, AI will be able to understand more of the subtle nuances of language, and will be able to identify different issues beyond suicide as well, including quickly spotting more kinds of bullying and hate.

Suicide is one of the leading causes of death for young people, and this is a new approach to prevention. We’re going to keep working closely with our partners at Save.org, National Suicide Prevention Lifeline ‘1-800-273-TALK (8255)’, Forefront Suicide Prevent, and with first responders to keep improving. If we can use AI to help people be there for their family and friends, that’s an important and positive step forward.”

Facebook & The Future Of Monitoring

Since its launch in February of 2004, Facebook has faced criticism over data, privacy, and identity security.

And while the purpose of the technology in this case seems difficult to fault, some have decried the use of artificial intelligence to prevent suicide as impersonal, and an invasion of privacy to boot. There is currently no way to opt out of the artificial intelligence-based analysis, nor is it clear how, or if, human beings will play a role in the process.

In 2016, Facebook rolled out tools to make reporting suicidal behavior easier, and it already has a team of 4,500 employees who monitor Facebook Live video streams for violent or suicidal occurrences. In May of 2017, the California-based company announced plans to add another 3,000 employees to that team, though in the future this kind of work could be ‘performed by’ artificial intelligence as well.
