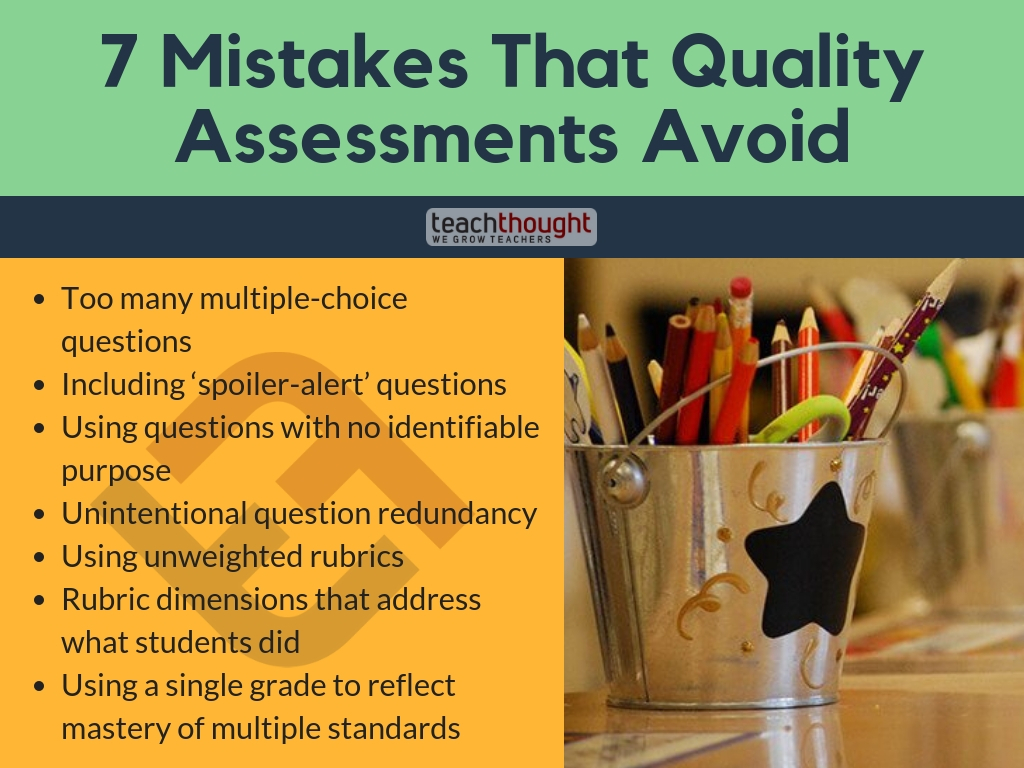

What Mistakes Do Quality Assessments Avoid?

contributed by Daniel R. Venables, Founding Director of the Center for Authentic PLCs

Giving a test is easy; assessing understanding is not.

What are the most common mistakes quality assessments avoid? The following are things best avoided when designing quality assessments.

1. Too many multiple-choice questions

Sure, they are easy to correct and reduce the time it takes teachers to correct several classes of tests, but a wrong answer generally reveals very little about a student’s knowledge or understanding. (The same is true of a correct answer, insofar as we have no idea whether it was a random guess.)

To know how a student was thinking or where her confusion might lie, we need questions that give her a chance to say more than ‘B.’ Moreover, in the words of the late, great educator Dr. Ted Sizer, “How can we assess if a student is using her mind well if we ask her questions that have answers to choose from?”

See also, The Problem With Multiple Choice Questions by Terry Heick.

2. Including ‘spoilers’

By these, I mean questions that tell the student how to answer them. For example, on a math test containing a section on applying logarithms, don’t say “Use logs to solve each of the following”; instead, say “Solve each of the following.”

3. Using questions with no identifiable purpose

It’s so easy for us as educators to put a question on an assessment we’re writing that seemed like a good one but, upon further examination, has no real purpose.

For each question item included on your assessments, ask: What is the purpose of this question? What specific knowledge will I gain about my students’ levels of mastery of a standard or substandard by their answers to this question?

4. Unintentional question redundancy

You may be deliberately asking a question that tests the same skill or understanding, at the same level of cognition, as another question. Very often, though, we include several question items that look different, or have different window dressing, yet test exactly the same understanding or skill as a previous question. Ask yourself: Do I need another question testing this, or have I covered this standard sufficiently with previous questions at previous levels of depth?

Remember all the correcting you had to do in #1? Less is more.

5. Using unweighted rubrics

If the assessments you’re designing have a component that is to be scored with a rubric, weight the various dimensions in the rubric according to their significance.

For example, if one dimension is Writing Mechanics and another is Source Citing, decide if these should hold the same weight in the overall grade on this assessment and, if not, weight them accordingly. It is a rare case when it is sensible for every dimension of a rubric to have the same weight. Don’t do so by default because it hadn’t occurred to you to give them different weights.
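To make the arithmetic concrete, here is a minimal sketch of how weighting changes an overall rubric score. The 4-point scale, the scores, and the 1-to-3 weighting are hypothetical values chosen only for illustration, and `weighted_rubric_score` is an illustrative helper, not part of any grading tool.

```python
def weighted_rubric_score(scores, weights):
    """Combine per-dimension rubric scores into one weighted average."""
    if set(scores) != set(weights):
        raise ValueError("scores and weights must cover the same dimensions")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(scores[d] * weights[d] for d in scores) / total_weight


# Hypothetical scores on a 4-point scale (assumed for this example).
scores = {"Writing Mechanics": 4, "Source Citing": 2}

# Equal weights: the default many rubrics fall into.
equal = weighted_rubric_score(
    scores, {"Writing Mechanics": 1, "Source Citing": 1}
)

# Assumed judgment call: Source Citing matters three times as much.
weighted = weighted_rubric_score(
    scores, {"Writing Mechanics": 1, "Source Citing": 3}
)

print(equal)     # 3.0
print(weighted)  # 2.5
```

The same student work earns a 3.0 with equal weights and a 2.5 once Source Citing counts for more, which is why the weights deserve a deliberate decision.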

6. Rubric dimensions that address what students did

The purpose of the rubric is to discern levels of mastery of the various standards addressed by the assessment; it is not a checklist of whether the student included the things she was supposed to, in the manner you requested.

For example, a rubric dimension Use of Text as Evidence addresses a learning standard or substandard being assessed, but a rubric dimension Completion of Portfolio Requirements addresses something the student did and not something the student learned.

Let the rubric reflect a student’s level of learning; let a separate checklist denote what she did in the demonstration of that learning. [Bonus tip: On the checklist, I always include an item like “Use of Class Time.”]

7. Using a single grade to reflect mastery of multiple standards

This may be a time-honored tradition, but it really makes no sense. We are interested in our students’ mastery against a standard or several standards and in that regard, there should be a separate score/grade assigned for each.

If this is too bold a break from tradition for you and you insist on an overall score for every assessment, avoid thinking in terms of percentages (another time-honored tradition that really makes no sense).

Score the component parts of the assessment as you will and if you’re going to use an overall score, make it one that is sensible based on the amount of mastery evidenced and not on what percentage of the assessment items the student answered correctly.
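As one way to picture per-standard scoring, the sketch below tallies results separately for each standard instead of collapsing them into a single grade. The standard labels, point values, and the `mastery_by_standard` helper are all hypothetical; how you turn each tally into a mastery judgment is left to you.

```python
from collections import defaultdict


def mastery_by_standard(item_results):
    """Total points earned and points possible for each standard.

    item_results: iterable of (standard, earned, possible) tuples,
    one per assessment item.
    """
    totals = defaultdict(lambda: [0, 0])
    for standard, earned, possible in item_results:
        totals[standard][0] += earned
        totals[standard][1] += possible
    return {s: (e, p) for s, (e, p) in totals.items()}


# Hypothetical assessment with two items per standard.
item_results = [
    ("Standard A", 5, 5),
    ("Standard A", 4, 5),
    ("Standard B", 1, 5),
    ("Standard B", 2, 5),
]

report = mastery_by_standard(item_results)
for standard, (earned, possible) in report.items():
    print(f"{standard}: {earned} of {possible} points")
```

In this made-up case a single grade of 12 out of 20 would hide the fact that the student earned 9 of 10 points on Standard A but only 3 of 10 on Standard B.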

Daniel R. Venables is Founding Director of the Center for Authentic PLCs and author of How Teachers Can Turn Data Into Action (ASCD, 2014), The Practice of Authentic PLCs: A Guide to Effective Teacher Teams (Corwin, 2011), and Facilitating Authentic PLCs: The Human Side of Leading Teacher Teams (ASCD, forthcoming). He can be contacted at dvenables@authenticplcs.com.

This post is aligned with two themes from Connected Educator Month 2015: “Innovations in Professional Learning,” led by ASCD, and “Innovations in Assessment,” led by NCTE. Image attribution: Flickr user nickamostcato